Quantum information and computation theory researcher.

ABOUT

I am a researcher in quantum information and computation theory. Currently, I am a postdoctoral research fellow at the Free University of Berlin. Previously I have worked at the Perimeter Institute and the Institute for Quantum Computing, department of Combinatorics and Optimization at the University of Waterloo. I completed my PhD at the University of Sydney in 2020.

I am interested in the computational power of quantum systems. Recently, I have been focusing on how noise affects computational power and alternate paths to fault tolerance. Drawing on insights from quantum foundations and theoretical computer science, I continue to investigate the resources for quantum computational power and how these interact with each other. My work on the classical simulation of quantum circuits and proofs of the hardness of classical simulation aims to refine our understanding of the separation between the computational power of classical and quantum systems. My work on learning quantum systems explores the theoretical and practical limits of state learning.

PAPERS: Classical vs Quantum

_edited.jpg)

Hakop Pashayan, Joel J. Wallman, and Stephen D. Bartlett

Phys. Rev. Lett. 115, 070501 (2015)

100+ citations

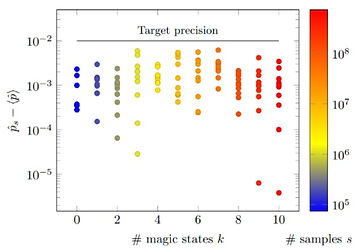

We present a method for estimating the probabilities of outcomes of a quantum circuit using Monte Carlo sampling techniques applied to a quasiprobability representation. Our estimate converges to the true quantum probability at a rate determined by the total negativity in the circuit, using a measure of negativity based on the 1-norm of the quasiprobability. If the negativity grows at most polynomially in the size of the circuit, our estimator converges efficiently. These results highlight the role of negativity as a measure of nonclassical resources in quantum computation.

_edited.jpg)

_edited.jpg)

Hakop Pashayan, Stephen D. Bartlett, and David Gross

Quantum 4, 223 (2020)

Invited talk at TQC2018

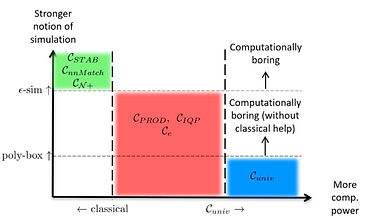

Investigating the classical simulability of quantum circuits provides a promising avenue towards understanding the computational power of quantum systems. Whether a class of quantum circuits can be efficiently simulated with a probabilistic classical computer, or is provably hard to simulate, depends quite critically on the precise notion of ``classical simulation'' and in particular on the required accuracy. We argue that a notion of classical simulation, which we call EPSILON-simulation (or ϵ-simulation for short), captures the essence of possessing ``equivalent computational power'' as the quantum system it simulates: It is statistically impossible to distinguish an agent with access to an ϵ-simulator from one possessing the simulated quantum system. We relate ϵ-simulation to various alternative notions of simulation predominantly focusing on a simulator we call a poly-box. A poly-box outputs 1/poly precision additive estimates of Born probabilities and marginals. This notion of simulation has gained prominence through a number of recent simulability results. Accepting some plausible computational theoretic assumptions, we show that ϵ-simulation is strictly stronger than a poly-box by showing that IQP circuits and unconditioned magic-state injected Clifford circuits are both hard to ϵ-simulate and yet admit a poly-box. In contrast, we also show that these two notions are equivalent under an additional assumption on the sparsity of the output distribution (poly-sparsity).

Hakop Pashayan, Oliver Reardon-Smith, Kamil Korzekwa, and Stephen D Bartlett

PRX Quantum 3, 020361 (2022)

Contributed talk at QIP2021

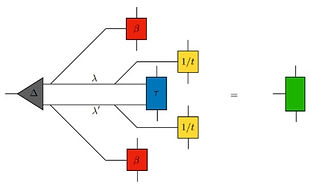

We present two classical algorithms for the simulation of universal quantum circuits on n qubits constructed from c instances of Clifford gates and t arbitrary-angle Z-rotation gates such as T gates. Our algorithms complement each other by performing best in different parameter regimes. The ESTIMATE algorithm produces an additive precision estimate of the Born rule probability of a chosen measurement outcome with the only source of run-time inefficiency being a linear dependence on the stabilizer extent (which scales like 1.17^t for T gates). Our algorithm is state-of-the-art for this task: as an example, in approximately 25 hours (on a standard desktop computer), we estimated the Born rule probability to within an additive error of 0.03, for a 50 qubit, 60 non-Clifford gate quantum circuit with more than 2000 Clifford gates. The COMPUTE algorithm calculates the probability of a chosen measurement outcome to machine precision with run-time O(2^tr (t-r)t) where r is an efficiently computable, circuit-specific quantity. With high probability, r is very close to min{t,n-w} for random circuits with many Clifford gates, where w is the number of measured qubits. COMPUTE can be effective in surprisingly challenging parameter regimes, eg, we can randomly sample Clifford+T circuits with , n=55, w=5, c=10^5 and t=80 T-gates, and then compute the Born rule probability with a run-time consistently less than 10^4 seconds using a single core of a standard desktop computer. We provide a C+ Python implementation of our algorithms.

_edited_edited.jpg)

Hammam Qassim, Hakop Pashayan, and David Gosset

Quantum 5, 606 (2021)

Contributed talk at TQC2022

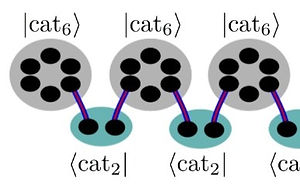

In this work we improve the runtime of recent classical algorithms for strong simulation of quantum circuits composed of Clifford and T gates. The improvement is obtained by establishing a new upper bound on the stabilizer rank of m copies of the magic state |T> in the limit of large

m. In particular, we show that m copies of |T> an be exactly expressed as a superposition of at most O(2^αm) stabilizer states, where α≤0.3963, improving on the best previously known bound α≤0.463. This furnishes, via known techniques, a classical algorithm which approximates output probabilities of an n-qubit Clifford + T circuit U with m uses of the T gate to within a given inverse polynomial relative error using a runtime poly(n,m) 2^αm. We also provide improved upper bounds on the stabilizer rank of symmetric product states more generally; as a consequence we obtain a strong simulation algorithm for circuits consisting of Clifford gates and m instances of any (fixed) single-qubit Z-rotation gate with runtime poly(n,m) 2^0.5m. We suggest a method to further improve the upper bounds by constructing linear codes with certain properties.

_edited.jpg)

James R Seddon, Bartosz Regula, Hakop Pashayan, Yingkai Ouyang, Earl T Campbell

PRX Quantum 2, 010345 (2021)

Talk at TQC2020

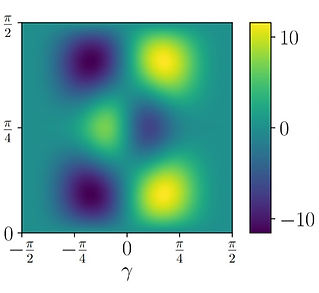

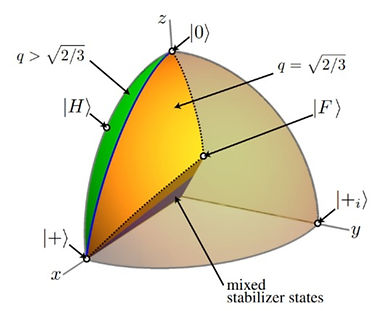

Consumption of magic states promotes the stabilizer model of computation to universal quantum computation. Here, we propose three different classical algorithms for simulating such universal quantum circuits. Our first simulator introduces a new class of quasiprobability distributions and connects its runtime to a generalized notion of negativity. We prove that this algorithm is exponentially faster than all prior quasiprobability simulators for qubits. Our second simulator is a new variant of the stabilizer-rank simulation algorithm, extended to work with mixed states and with significantly improved runtime bounds. Our third simulator trades precision for speed by discarding negative quasiprobabilities. We connect each algorithm’s performance to a corresponding magic monotone and, by comprehensively characterizing the monotones, we obtain a precise understanding of the simulation runtime and error bounds. Our analysis reveals a deep connection between all three seemingly unrelated simulation techniques and their associated monotones. For tensor products of single-qubit states, we prove that our monotones are all equal to each other, multiplicative and efficiently computable, allowing us to make clear-cut comparisons of the simulators’ performance scaling. Furthermore, our monotones establish several asymptotic and non-asymptotic bounds on state interconversion and distillation rates. Beyond the theory of magic states, our classical simulators can be adapted to other resource theories under certain axioms, which we demonstrate through an explicit application to the theory of quantum coherence.

PAPERS: Learning

_edited.jpg)

Christian Bertoni, Jonas Haferkamp, Marcel Hinsche, Marios Ioannou, Jens Eisert, Hakop Pashayan

Phys. Rev. Lett. - Accepted 17 May 2024

arXiv:2209.12924 [quant-ph] (2022)

Contributed talk at TQC2023

We provide practical and powerful schemes for learning many properties of an unknown n-qubit quantum state using a sparing number of copies of the state. Specifically, we present a depth-modulated randomized measurement scheme that interpolates between two known classical shadows schemes based on random Pauli measurements and random Clifford measurements. These can be seen within our scheme as the special cases of zero and infinite depth, respectively. We focus on the regime where depth scales logarithmically in n and provide evidence that this retains the desirable properties of both extremal schemes whilst, in contrast to the random Clifford scheme, also being experimentally feasible. We present methods for two key tasks; estimating expectation values of certain observables from generated classical shadows and, computing upper bounds on the depth-modulated shadow norm, thus providing rigorous guarantees on the accuracy of the output estimates. We consider observables that can be written as a linear combination of poly(n) Paulis and observables that can be written as a low bond dimension matrix product operator. For the former class of observables both tasks are solved efficiently in n. For the latter class, we do not guarantee efficiency but present a method that works in practice; by variationally computing a heralded approximate inverses of a tensor network that can then be used for efficiently executing both these tasks.

_edited.jpg)

Daniel Grier, Hakop Pashayan, Luke Schaeffer

arXiv:2211.11810 [quant-ph] (2022)

Contributed talk at TQC2022

We consider the classical shadows task for pure states in the setting of both joint and independent measurements. The task is to measure few copies of an unknown pure state ρ in order to learn a classical description which suffices to later estimate expectation values of observables. Specifically, the goal is to approximate Tr(Oρ) for any Hermitian observable O to within additive error ϵ provided Tr(O^2)≤B and ∥O∥=1. Our main result applies to the joint measurement setting, where we show Θ(√B/ϵ+1/ϵ^2) samples of ρ are necessary and sufficient to succeed with high probability. The upper bound is a quadratic improvement on the previous best sample complexity known for this problem. For the lower bound, we see that the bottleneck is not how fast we can learn the state but rather how much any classical description of ρ can be compressed for observable estimation. In the independent measurement setting, we show that O(√(Bd)/ϵ+1/ϵ^2) samples suffice. Notably, this implies that the random Clifford measurements algorithm of Huang, Kueng, and Preskill, which is sample-optimal for mixed states, is not optimal for pure states. Interestingly, our result also uses the same random Clifford measurements but employs a different estimator.

_edited.jpg)

PRINCIPAL EIGENSTATE CLASSICAL SHADOWS

Daniel Grier, Hakop Pashayan, Luke Schaeffer

Proceedings of 37th Annual Conference on Learning Theory (COLT 2024)

arXiv:2405.13939 [quant-ph] (2024)

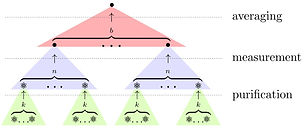

Given many copies of an unknown quantum state ρ, we introduce the task of learning a classical description of its principal eigenstate. Namely, assuming that ρ has an eigenstate |φ⟩ with eigenvalue λ > 1/2, the goal is to learn a (classical shadows style) classical description

of |φ⟩ which can later be used to estimate expectation values ⟨φ|O|φ⟩ for any O in some class of observables. We argue that learning the principal eigenstate is the more natural setting for many applications of classical shadows, and in fact, can also lead to a reduction in sample

complexity. We give a sample-efficient algorithm for this task that compares favorably to the naive approach of applying quantum state purification followed by the single-copy classical shadows scheme. In fact, in the regime where λ is sufficiently large, the sample complexity

of our algorithm matches the optimal sample complexity for pure state classical shadows.

PAPERS: Characterization and Verification

_edited.jpg)

Athena Ceasura, Pavithran Iyer, Joel J. Wallman, Hakop Pashayan

arXiv:2212.05488 [quant-ph] (2022)

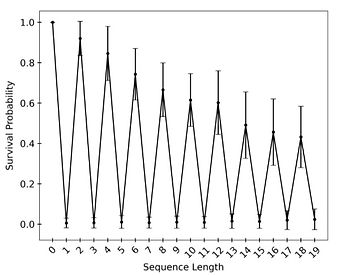

We construct a gate and time-independent noise model that results in the output of a logical randomized benchmarking protocol oscillating rather than decaying exponentially. To illustrate our idea, we first construct an example in standard randomized benchmarking where we assume the existence of ``hidden'' qubits, permitting a choice of representation of the Clifford group that contains multiplicities. We use the multiplicities to, with each gate application, update a hidden memory of the gate history that we use to circumvent theorems which guarantee the output decays exponentially. In our focal setting of logical randomized benchmarking, we show that the presence of machinery associated with the implementation of quantum error correction can facilitate non-exponential decay. Since, in logical randomized benchmarking, the role of the hidden qubits is assigned to the syndrome qubits used in error correction and these are strongly coupled to the logical qubits via a decoder.

PAPERS: Quantum Foundations

_edited.jpg)

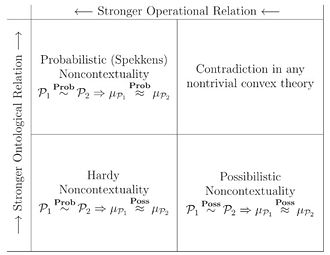

Andrew W Simmons, Joel J Wallman, Hakop Pashayan, Stephen D Bartlett, Terry Rudolph

New Journal of Physics, 19 (3), 033030 (2017)

The presence of contextuality in quantum theory was first highlighted by Bell, Kochen and Specker, who discovered that for quantum systems of three or more dimensions, measurements could not be viewed as deterministically revealing pre-existing properties of the system. More precisely, no model can assign deterministic outcomes to the projectors of a quantum measurement in a way that depends only on the projector and not the context (the full set of projectors) in which it appeared, despite the fact that the Born rule probabilities associated with projectors are independent of the context. A more general, operational definition of contextuality introduced by Spekkens, which we will term ‘probabilistic contextuality’, drops the assumption of determinism and allows for operations other than measurements to be considered contextual. Even two-dimensional quantum mechanics can be shown to be contextual under this generalised notion. Probabilistic noncontextuality represents the postulate that elements of an operational theory that cannot be distinguished from each other based on the statistics of arbitrarily many repeated experiments(they give rise to the same operational probabilities) are ontologically identical. In this paper, we introduce a framework that enables us to distinguish between different noncontextuality assumptions in terms of the relationships between the ontological representations of objects in the theory given a certain relation between their operational representations. This framework can be used to motivate and define a ‘possibilistic’ analogue, encapsulating the idea that elements of an operational theory that cannot be unambiguously distinguished operationally can also not be unambiguously distinguished ontologically. We then prove that possibilistic noncontextuality is equivalent to an alternative notion of noncontextuality proposed by Hardy. Finally, we demonstrate that these weaker noncontextuality assumptions are sufficient to prove alternative versions of known ‘no-go’ theorems that constrain ψ-epistemic models for quantum mechanics.

SEMINARS

In 2020/21, co-organised the IQC Computer Science seminar and the IQC-QuICS Math and CS seminars.

This virtual seminar is jointly sponsored by Institute for Quantum Computing (University of Waterloo) and the Joint Center for Quantum Information and Computer Science (University of Maryland). We are interested in understanding the theoretical tools that underlie current results in quantum information, especially insofar as they overlap with mathematics and theoretical computer science. Talks are 50 minutes long, with time for Q&A and discussion.

Co-organised with Carl Miller, Yusuf Alnawakhtha and Daniel Grier.